How To Use JDBC addBatch Method with MySQL for Improved Performance

When you have to deal with a large amount of data to be operated on mySQL databases, the performance can be dramatically improved using a few simple tweaks.

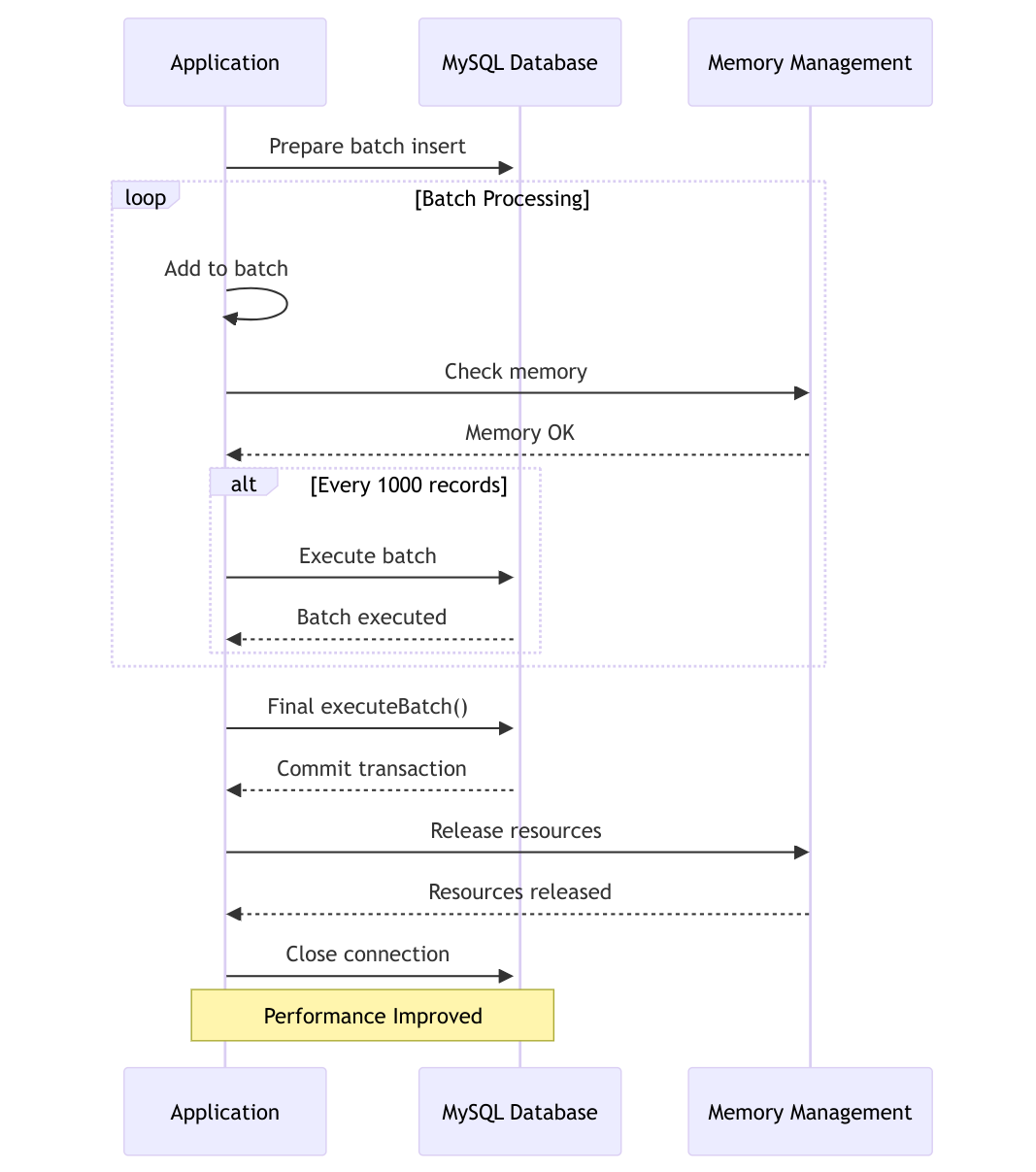

First of all you have to use Statement.addBatch/executeBatch instead of simple execute methods. For each added batch, the jdbc driver will store in local memory and when the executeBatch is invoked, all the batches are sent at once to the database. This will result in an huge speed improvement.

When you deal with such operations you should keep an eye one the memory allocated to the java process. The JDBC driver(Connector/J) will use the heap memory to build the batch until is executed. In order to make sure you don't run out of memory you have to executeBatch method from time to time.

When you deal with mySQL database you can make and additional speed improvement. This can be applied for the cases when you have to insert values in the database(which is the probably the case because 90% of large amount data operations are imports).

When you configure the JDBC connection with "rewriteBatchedStatements=true", the driver takes the statements in the form "INSERT INTO foo VALUES (...)" and rewrites them as "INSERT INTO foo VALUES (...), (...), (...)". You can see below benchmarks which records 10x performance increase. You have to make sure you have the latest Connector/J version to to squeeze the best performance out of it(at least 5.1.8).

Connector/J has a feature where the driver can take prepared statements of the form "INSERT INTO foo VALUES (...)", and if configured with "rewriteBatchedStatements=true", can re-write batches of them to the form "INSERT INTO foo VALUES (...), (...), (...)". This is a performance win on a few fronts, because of reduction in latency (remember, MySQL in general doesn't have a "batch" form of prepared statement parameter bindings, so each parameter set usually needs to be sent as a separate INSERT), and because of optimizations of handling "multivalue" INSERT in the server itself.

// Import necessary SQL and JDBC packages

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.SQLException;

public class DatabaseOptimization {

static protected String INSERT_GAME_SQL =

"INSERT INTO tablename(name, category, title, description, metascore) VALUES(?, ?, ?, ?, ?)";

public void batchInsert(int limit) throws SQLException {

Connection connection = null;

try {

// Load the MySQL JDBC driver to initiate a connection

Class.forName("com.mysql.jdbc.Driver").newInstance();

// Connection string includes rewriteBatchedStatements=true for optimizing batch inserts

String connectionString = "jdbc:mysql://?rewriteBatchedStatements=true";

String user = "";

String passwd = "";

// Establishing a connection with auto-commit turned off for batch execution

connection = DriverManager.getConnection(connectionString, user, passwd);

connection.setAutoCommit(false);

// Preparing the SQL statement for batch insert

PreparedStatement statement = connection.prepareStatement(INSERT_GAME_SQL);

// Loop through data and add to batch

for (int i = 0; i < limit; i++) {

// Example data assignment, replace with actual data source

statement.setString(1, "value1");

statement.setString(2, "value2");

statement.setString(3, "value3");

statement.setString(4, "value4");

statement.setInt(5, 134);

statement.addBatch();

// Execute batch after every 1000 records to manage memory and performance

if (i % 1000 == 0 && i > 0) {

statement.executeBatch();

connection.commit(); // Commit the transaction to make changes permanent

}

}

// Execute any remaining batches not executed in the loop

statement.executeBatch();

connection.commit(); // Final commit to ensure all data is saved

} catch(SQLException | ClassNotFoundException | InstantiationException | IllegalAccessException e) {

// Attempt to rollback changes in case of error to maintain data integrity

if (connection != null) {

connection.rollback();

}

throw e; // Rethrow the exception for further handling

} finally {

// Cleanup: Close the PreparedStatement and Connection to free resources

if (connection != null) {

try {

connection.close();

} catch (SQLException e) {

// Handle possible SQLException on close

}

}

}

}

}

The code cosists of the following sections:

- Loading JDBC Driver and Connection Setup: The first step involves loading the MySQL JDBC driver and setting up a connection to the database. Importantly, the connection string includes rewriteBatchedStatements=true, which instructs the JDBC driver to optimize the batch insert operation.

- Disabling Auto-Commit: Disabling auto-commit (connection.setAutoCommit(false)) is crucial for batch processing. This setting prevents each insert operation from being automatically committed to the database, allowing us to manually control when commits happen. This significantly improves performance by reducing the number of round-trips to the database.

- Preparing and Executing Batches: In the loop, we prepare each batch of inserts by setting the parameters for the INSERT statement and then adding it to the batch with statement.addBatch(). Executing the batch every 1000 records (statement.executeBatch()) and then committing (connection.commit()) helps in managing memory usage and enhances performance. This is because large numbers of uncommitted records can consume substantial memory and potentially lead to performance degradation or errors.

- Error Handling and Rollback: Proper error handling with a rollback in the catch block ensures that if any part of the batch insert fails, all changes made in the current transaction are undone, maintaining the integrity of the database state.

- Cleanup: Finally, closing the PreparedStatement and Connection in the finally block is essential to free up database resources, which can prevent resource leaks that might degrade the performance of your application over time.

Conclusion

MySQL performance can be improved when inserting large amount of data by using rewriteBatchedStatements. As always software development requires improvement. Looking for flexibility and advantages of different database systems I started to use also sqlite for small projects. If you're looking to transition to a lighter, file-based database system for your project, you can check the next post on converting your database from MySQL to SQLite.

How To Use JDBC addBatch Method with MySQL for Improved Performance

Maximizing MySQL Database Performance for Large Data Operations using JDBC addBatch Method

How To Use JDBC addBatch Method with MySQL for Improved Performance

Maximizing MySQL Database Performance for Large Data Operations using JDBC addBatch Method